Hello everyone! This page collects trending papers on LLMs and AI agents. If you are new to AI agents, please read AI agent 101 first, then dive into this page!


Generative Agents: Interactive Simulacra of Human Behavior (2023/4/7)

Summary

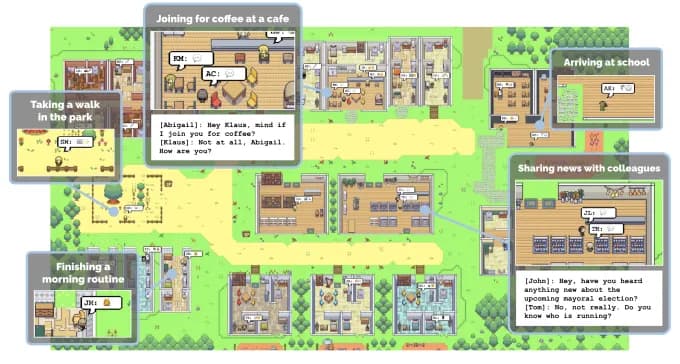

This paper presents generative agents, computational agents designed to simulate believable human behavior. These agents carry out daily routines such as waking up, cooking breakfast, and going to work; artists paint and authors write. They exhibit human-like qualities: they form opinions, start conversations, remember past interactions, and make plans for the future. To achieve this, we propose an architecture that extends a large language model to store a complete record of each agent's experiences in natural language, synthesize those memories over time into higher-level reflections, and retrieve them dynamically to plan future actions.
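The memory architecture described above can be made concrete with a short sketch. In the paper, retrieval sums three scores, each min-max normalized to [0, 1] and weighted equally: recency (exponential decay with factor 0.995 per game hour since the memory was last retrieved), importance (a 1 to 10 rating), and relevance (cosine similarity between embeddings). Reflection is triggered once the summed importance of recent events exceeds 150. The Python below is a minimal sketch under stated assumptions, not the authors' code: `Memory`, `MemoryStream`, and all method names are hypothetical, importance scores and embeddings are supplied by the caller instead of by an LLM and an embedding model, and the LLM call that actually writes reflections is omitted.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Memory:
    """One entry in a hypothetical memory stream (names are illustrative)."""
    text: str
    last_access_hour: float   # sandbox time (game hours) of the last retrieval
    importance: float         # 1-10 score; the paper has the LLM rate this
    embedding: List[float]    # assumption: caller supplies any fixed-size embedding


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity, returning 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def min_max(values: List[float]) -> List[float]:
    """Scale values to [0, 1]; a constant column contributes 0 to every score."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


@dataclass
class MemoryStream:
    memories: List[Memory] = field(default_factory=list)
    decay: float = 0.995                  # recency decay per game hour since last access
    reflection_threshold: float = 150.0   # reflect once recent importance exceeds this
    importance_since_reflection: float = 0.0

    def add(self, memory: Memory) -> None:
        self.memories.append(memory)
        self.importance_since_reflection += memory.importance

    def should_reflect(self) -> bool:
        return self.importance_since_reflection > self.reflection_threshold

    def mark_reflected(self) -> None:
        # Call after the (omitted) LLM step that writes reflections back as memories.
        self.importance_since_reflection = 0.0

    def retrieve(self, query_embedding: List[float], now_hour: float, k: int = 3) -> List[Memory]:
        """Rank by recency + importance + relevance, each min-max scaled, equal weights."""
        if not self.memories:
            return []
        recency = [self.decay ** (now_hour - m.last_access_hour) for m in self.memories]
        importance = [m.importance for m in self.memories]
        relevance = [cosine(query_embedding, m.embedding) for m in self.memories]
        scores = [r + i + v for r, i, v in
                  zip(min_max(recency), min_max(importance), min_max(relevance))]
        ranked = sorted(range(len(self.memories)), key=lambda j: scores[j], reverse=True)
        top = [self.memories[j] for j in ranked[:k]]
        for m in top:
            m.last_access_hour = now_hour  # retrieving a memory refreshes its recency
        return top


if __name__ == "__main__":
    stream = MemoryStream()
    stream.add(Memory("Isabella is planning a Valentine's Day party", 0, 8, [1.0, 0.0]))
    stream.add(Memory("The stove is turned off", 1, 1, [0.0, 1.0]))
    stream.add(Memory("Klaus is reading in the library", 2, 3, [0.0, 1.0]))
    best = stream.retrieve([1.0, 0.0], now_hour=3, k=1)
    print(best[0].text)  # -> Isabella is planning a Valentine's Day party
```

Here the party memory wins despite being the oldest, because its high importance and relevance outweigh its lower recency.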

We demonstrate generative agents in an interactive sandbox environment inspired by The Sims, where users can interact with a community of twenty-five agents using natural language. Our evaluation shows that these agents produce believable individual and emergent social behaviors. For instance, starting from the single premise that one agent wants to host a Valentine's Day party, the agents autonomously spread invitations, make new acquaintances, and coordinate their attendance, all without further user intervention.

Through ablation, we show that each component of our architecture (observation, planning, and reflection) contributes critically to the believability of agent behavior. By combining large language models with computational, interactive agents, this work introduces architectural and interaction patterns for believable simulations of human behavior, which can inform the design of interactive applications that require authentic human-like interactions.

ReAct: Synergizing Reasoning and Acting in Language Models

Summary

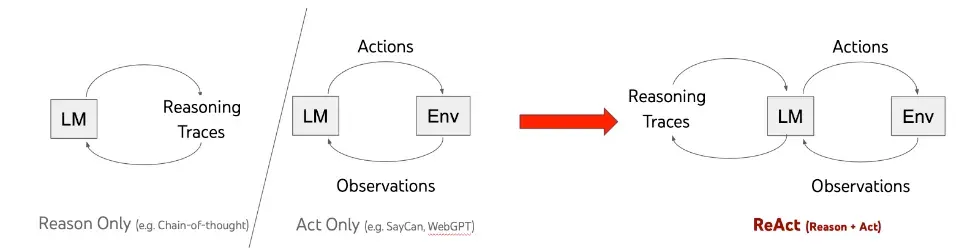

In this paper, we explore using large language models (LLMs) to generate both reasoning traces (e.g., chain-of-thought prompting) and task-specific actions (e.g., action plan generation) in an interleaved manner. Previously, these capabilities were studied separately. Our approach, ReAct, interleaves reasoning and acting so that each supports the other: reasoning traces help the model induce, track, and update action plans and handle exceptions, while actions let it interface with external sources, such as knowledge bases or environments, to gather additional information.
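The interleaving described above amounts to a simple control loop: the model emits a thought and an action, the environment returns an observation, and the growing transcript is fed back in until the model calls a `finish[...]` action. The sketch below is a minimal illustration, not the authors' implementation. It assumes a scripted stand-in (`make_scripted_llm`, hypothetical) in place of a real LLM API, and the function names, the `tool[argument]` parsing, and the transcript layout are assumptions loosely modeled on the paper's Thought/Action/Observation trajectories.

```python
import re
from typing import Callable, Dict, Iterable, Optional, Tuple

# Actions are written as tool[argument], e.g. "search[Apple Remote]".
ACTION_RE = re.compile(r"^\s*(\w+)\[(.*)\]\s*$")


def parse_step(output: str) -> Tuple[str, str]:
    """Split one model completion into its Thought and Action lines."""
    thought, action = "", ""
    for line in output.splitlines():
        if line.startswith("Thought:"):
            thought = line[len("Thought:"):].strip()
        elif line.startswith("Action:"):
            action = line[len("Action:"):].strip()
    return thought, action


def react_loop(
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> Optional[str]:
    """Interleave Thought -> Action -> Observation until the model calls finish[...]."""
    transcript = f"Question: {question}\n"
    for step in range(1, max_steps + 1):
        thought, action = parse_step(llm(transcript))
        transcript += f"Thought {step}: {thought}\nAction {step}: {action}\n"
        match = ACTION_RE.match(action)
        if match is None:
            observation = "Invalid action format."
        else:
            name, arg = match.groups()
            if name == "finish":
                return arg  # the model has committed to an answer
            tool = tools.get(name)
            observation = tool(arg) if tool else f"Unknown tool: {name}"
        # Append the observation so the next thought can reason over it.
        transcript += f"Observation {step}: {observation}\n"
    return None  # step budget exhausted without finish[...]


def make_scripted_llm(completions: Iterable[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for a real LLM call: replays fixed completions in order."""
    it = iter(completions)
    return lambda prompt: next(it)


if __name__ == "__main__":
    docs = {"Apple Remote": "The Apple Remote was originally designed to control "
                            "the Front Row media center program."}
    tools = {"search": lambda q: docs.get(q, "No results.")}
    llm = make_scripted_llm([
        "Thought: I should look up the Apple Remote.\nAction: search[Apple Remote]",
        "Thought: The observation says it controls Front Row.\nAction: finish[Front Row]",
    ])
    print(react_loop(llm, tools, "Which program was the Apple Remote designed to control?"))
    # -> Front Row
```

Keeping the transcript as plain text mirrors how a prompted LLM sees the trajectory; swapping `make_scripted_llm` for a real model call would leave the loop unchanged.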

We apply ReAct to a diverse set of language and decision-making tasks and show that it is more effective than state-of-the-art baselines, while also improving human interpretability and trustworthiness over methods that lack reasoning or acting components. On question answering (HotpotQA) and fact verification (FEVER), ReAct overcomes the hallucination and error propagation that are prevalent in chain-of-thought reasoning by interacting with a simple Wikipedia API. It also generates human-like task-solving trajectories that are more interpretable than those of baselines without reasoning traces.
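The "simple Wikipedia API" mentioned above exposes three actions in the paper: `search[entity]`, which returns the opening sentences of a page or suggests similar titles when no exact page exists; `lookup[string]`, which returns the next sentence on the current page containing the string, like Ctrl+F in a browser; and `finish[answer]`. As a rough sketch only, the hypothetical `ToyWikiEnv` class below imitates that action space over an in-memory dictionary instead of calling Wikipedia. The class and method names, the observation strings, the naive sentence splitter, and the toy page text are all assumptions made for illustration.

```python
import re
from typing import Dict, List, Optional, Tuple


def split_sentences(text: str) -> List[str]:
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


class ToyWikiEnv:
    """Hypothetical in-memory stand-in for ReAct's search/lookup/finish actions."""

    def __init__(self, pages: Dict[str, str]):
        self.pages = pages
        self.current: Optional[List[str]] = None  # sentences of the last page found
        self.lookup_term: Optional[str] = None
        self.lookup_hits: List[str] = []
        self.lookup_index = 0

    def search(self, entity: str) -> str:
        text = self.pages.get(entity)
        if text is None:
            # Suggest up to five titles containing the query as "similar entities".
            similar = [t for t in self.pages if entity.lower() in t.lower()][:5]
            return f"Could not find {entity}. Similar: {similar}"
        self.current = split_sentences(text)
        self.lookup_term = None  # a new page resets any lookup in progress
        return " ".join(self.current[:5])  # only the first five sentences

    def lookup(self, term: str) -> str:
        if self.current is None:
            return "No page loaded; call search first."
        if term != self.lookup_term:
            # New term: collect every sentence on the page that mentions it.
            self.lookup_term = term
            self.lookup_hits = [s for s in self.current if term.lower() in s.lower()]
            self.lookup_index = 0
        if self.lookup_index >= len(self.lookup_hits):
            return "No more results."
        hit = self.lookup_hits[self.lookup_index]
        self.lookup_index += 1
        return f"(Result {self.lookup_index} / {len(self.lookup_hits)}) {hit}"

    def step(self, action: str) -> Tuple[str, bool]:
        """Run one action string such as 'search[X]'; returns (observation, done)."""
        match = re.match(r"^(\w+)\[(.*)\]$", action.strip())
        if match is None:
            return "Invalid action.", False
        name, arg = match.groups()
        if name == "search":
            return self.search(arg), False
        if name == "lookup":
            return self.lookup(arg), False
        if name == "finish":
            return f"Finished: {arg}", True
        return f"Unknown action: {name}", False


if __name__ == "__main__":
    env = ToyWikiEnv({"Front Row (software)": "Front Row is a discontinued media center "
                      "application. It could be controlled with the Apple Remote or "
                      "keyboard function keys."})
    for act in ["search[Front Row]", "search[Front Row (software)]",
                "lookup[keyboard]", "finish[keyboard function keys]"]:
        print(act, "->", env.step(act))
```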

On two interactive decision-making benchmarks, ReAct outperforms imitation and reinforcement learning methods by absolute success rates of 34% on ALFWorld and 10% on WebShop, while being prompted with only one or two in-context examples. Through ReAct, we show that combining reasoning with action generation in LLMs benefits both knowledge-intensive reasoning tasks and interactive decision-making tasks.